SlicerVR: 3D Slicer VR Module

SlicerVR for Medical Intervention Training and Planning in Immersive Virtual Reality. Pinter C, Lasso A, Choueib S, Asselin M, Fillion-Robin JC, Vimort JB, Martin K, Jolley MA, Fichtinger G. IEEE Trans Med Robot Bionics. 2020 May;2(2):108-117.

www.SlicerVR.org (→ Kitware github)


“Kitware, Inc. is the lead developer of CMake, ParaView, ITK, and VTK.

We also develop special-purpose algorithms and applications...”

- the SlicerVR module is actively developed and open-source (“BSD-type” Apache-2.0 license)

- Windows-only in practice because it depends on SteamVR, even though 3D Slicer itself is cross-platform; Linux support is experimental

- supports Oculus Rift, HTC Vive, and Windows Mixed Reality headsets

- allows collaborative (multiperson) VR


Medical Applications of VR

- training for surgery, which has been shown to reduce surgical times and improve performance

- developing plans for surgery or other treatment (radiation, RF ablation)

- patient education (e.g., showing the treatment plan on the patient's digital twin) and rehabilitation

- research and methods development: disease detection and classification, segmentation, etc.

Some Issues Specific to Medical Applications

- DICOM handling

- patient data management and protections

- objects are not just geometric but have associated medical data

- potentially very large images

- registration (alignment) of multiple images, e.g. different modalities or before-and-after scans

- connections to medical hardware, e.g. imaging systems

- code integration (Unity3D uses C#, whereas most medical imaging/robotics code is C/C++)

- heavy use of volume rendering, with complex transfer functions and custom shaders not available in gaming platforms

- limited visual context, no floor or sky

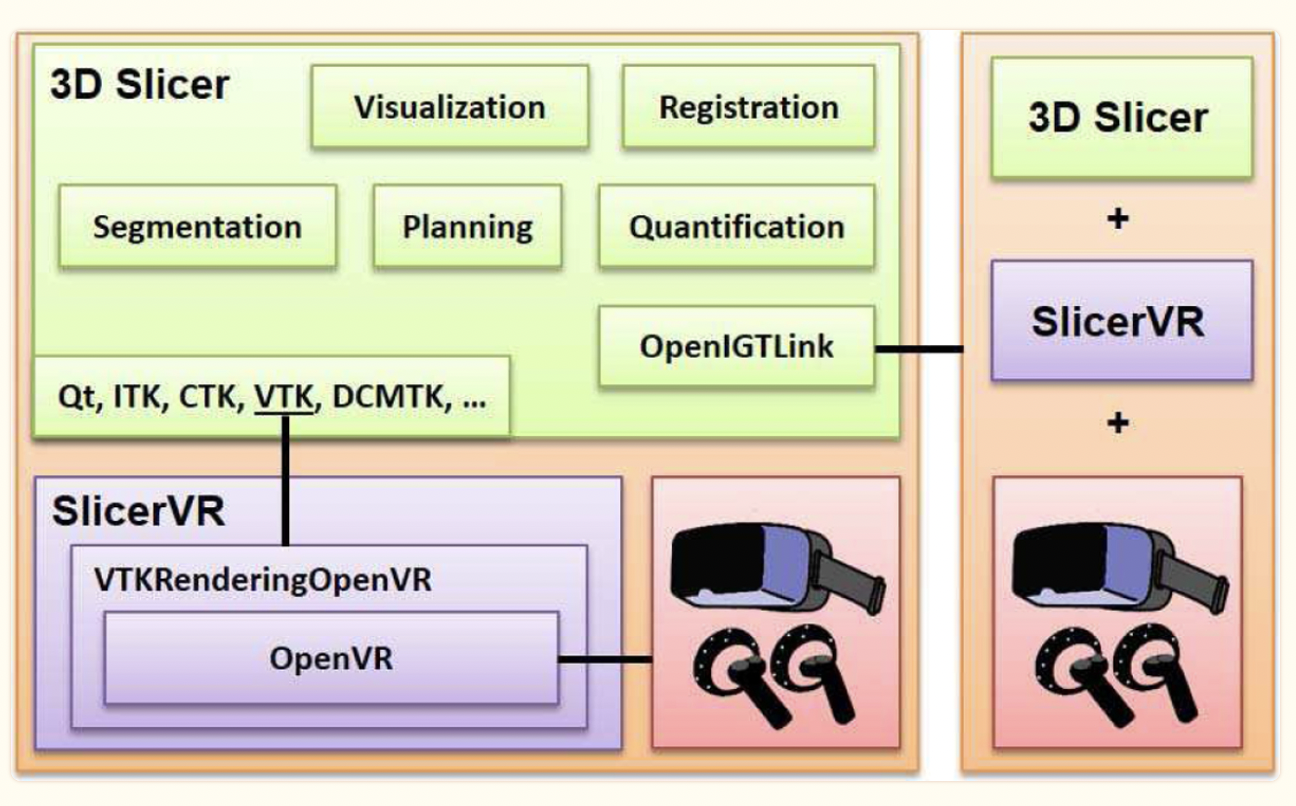

Architecture

- VTKRenderingOpenVR builds on the hardware abstraction in the OpenVR development kit

- SteamVR provides the connection between SlicerVR and OpenVR

- OpenIGTLink synchronizes 3D Slicer instances running on different workstations

- SlicerVR is a single-click install via the 3D Slicer Extension Manager; the extension is built and tested nightly from the source code in the public repository

- the user populates the scene using the desktop interface and then launches VR; a single scene is shared between the desktop interface and the headset, so modifications made in one are propagated to the other
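Sharing one scene between the desktop and the headset is essentially the observer pattern. In 3D Slicer this role is played by the MRML scene. The classes below (`SharedScene`, `View`) are hypothetical stand-ins, not the MRML API. They are a minimal sketch of how a single modification can notify both views.

```python
from typing import Callable, Dict, List

# Hypothetical stand-ins for a scene shared by desktop and headset views;
# this is NOT the 3D Slicer MRML API.
Observer = Callable[[str, str, object], None]


class SharedScene:
    def __init__(self) -> None:
        self._properties: Dict[str, Dict[str, object]] = {}
        self._observers: List[Observer] = []

    def add_observer(self, callback: Observer) -> None:
        self._observers.append(callback)

    def set_property(self, node: str, key: str, value: object) -> None:
        # Store the change once, then notify every registered view.
        self._properties.setdefault(node, {})[key] = value
        for callback in self._observers:
            callback(node, key, value)

    def get_property(self, node: str, key: str) -> object:
        return self._properties[node][key]


class View:
    """A rendering target (desktop 3D view or VR headset) that reacts to scene changes."""

    def __init__(self, name: str, scene: SharedScene) -> None:
        self.name = name
        self.last_change = None
        scene.add_observer(self.on_scene_modified)

    def on_scene_modified(self, node: str, key: str, value: object) -> None:
        # A real view would re-render here; the sketch only records the change.
        self.last_change = (node, key, value)


scene = SharedScene()
desktop = View("desktop", scene)
headset = View("headset", scene)
scene.set_property("Spine", "visible", False)  # e.g. toggled from the desktop GUI
```

Every edit goes through the same scene, whether it comes from the desktop GUI or from a controller in the headset. As a result, neither view needs to know the other exists.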

Controlling the viewpoint

- changing body/head position

- controller “pinch 3D” mode: pressing buttons on both controllers to select two points in the scene, dragging to rotate, scale, and/or move

- flying using the touchpad of the right-hand controller

- jumping to the reference viewpoint (the view in the 3D panel of the desktop instance)
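The two-controller “pinch 3D” gesture can be turned into a scene transform using only four positions: the two controller points at the moment the buttons were pressed, and the same two points now. The sketch below is one plausible formulation, not SlicerVR's code:

- **Scale** is the ratio of the inter-controller distances.
- **Rotation** is the smallest rotation aligning the old controller-to-controller direction with the new one, computed with Rodrigues' formula.
- **Translation** carries the midpoint between the controllers along with the hands.

Two points cannot determine a twist about the line that joins them, so this sketch ignores that degree of freedom.

```python
import math
from typing import Callable, List, Tuple

Vec = Tuple[float, float, float]
Mat3 = List[List[float]]


def sub(a: Vec, b: Vec) -> Vec:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def add(a: Vec, b: Vec) -> Vec:
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])


def scale(a: Vec, s: float) -> Vec:
    return (a[0] * s, a[1] * s, a[2] * s)


def dot(a: Vec, b: Vec) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def cross(a: Vec, b: Vec) -> Vec:
    return (a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0])


def rotate(r: Mat3, v: Vec) -> Vec:
    return tuple(sum(r[i][j] * v[j] for j in range(3)) for i in range(3))


def rotation_aligning(u: Vec, v: Vec) -> Mat3:
    """Smallest rotation taking unit vector u onto unit vector v (Rodrigues' formula)."""
    c = dot(u, v)
    if c < -1.0 + 1e-9:
        # Opposite directions: rotate 180 degrees about any axis perpendicular to u.
        e = (1.0, 0.0, 0.0) if abs(u[0]) < 0.9 else (0.0, 1.0, 0.0)
        a = cross(u, e)
        a = scale(a, 1.0 / math.sqrt(dot(a, a)))
        return [[2.0 * a[i] * a[j] - (1.0 if i == j else 0.0) for j in range(3)] for i in range(3)]
    k = cross(u, v)  # axis scaled by sin(theta)
    kx = [[0.0, -k[2], k[1]], [k[2], 0.0, -k[0]], [-k[1], k[0], 0.0]]
    kx2 = [[sum(kx[i][m] * kx[m][j] for m in range(3)) for j in range(3)] for i in range(3)]
    # R = I + [k]x + [k]x^2 / (1 + cos(theta))
    return [[(1.0 if i == j else 0.0) + kx[i][j] + kx2[i][j] / (1.0 + c)
             for j in range(3)] for i in range(3)]


def pinch_transform(p1: Vec, p2: Vec, q1: Vec, q2: Vec) -> Callable[[Vec], Vec]:
    """Hypothetical helper: map scene points so controller points p1, p2 (at grab) land on q1, q2 (now)."""
    d_old, d_new = sub(p2, p1), sub(q2, q1)
    len_old, len_new = math.sqrt(dot(d_old, d_old)), math.sqrt(dot(d_new, d_new))
    s = len_new / len_old
    r = rotation_aligning(scale(d_old, 1.0 / len_old), scale(d_new, 1.0 / len_new))
    mid_old, mid_new = scale(add(p1, p2), 0.5), scale(add(q1, q2), 0.5)
    return lambda x: add(scale(rotate(r, sub(x, mid_old)), s), mid_new)


# Controllers pulled from 1 unit apart to 2 units apart while swinging from the x axis to the y axis:
f = pinch_transform((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 0.0, 0.0), (0.0, 2.0, 0.0))
moved = f((1.0, 0.0, 0.0))  # approximately (0.0, 2.0, 0.0)
```

Keeping the midpoint fixed to the hands makes the scene feel "held" between the controllers. A production version would also need to handle the case where the two controllers start at the same position (zero length).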

Manipulating objects within the scene (individual model transforms)

- insert the “active point” (which can be shown as a colored dot) of either controller into the object, squeeze the trigger and drag

- objects can be made unselectable so that they won't move, analogous to deactivation in Chimera (e.g., to move a tool while the tissue around it stays in place)

- objects such as slice planes and representations of surgical tools can be slaved to the controller transformation by attaching a “handle” to them
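The trigger-drag interaction and the unselectable flag can be sketched with 4x4 homogeneous transforms. When the trigger is squeezed, store the object's pose relative to the controller, then keep re-applying that fixed offset as the controller moves. Attaching a handle works the same way, except that the offset is kept indefinitely instead of until release.

Assumptions in this sketch:

- The names `SceneObject`, `Grabber`, and `selectable` are hypothetical.
- Controller poses are rigid (rotation plus translation).
- Deciding which object the active point is inside is left out.

```python
from dataclasses import dataclass, field
from typing import List, Optional

Matrix = List[List[float]]  # 4x4 row-major homogeneous transform


def identity() -> Matrix:
    return [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]


def translation(x: float, y: float, z: float) -> Matrix:
    m = identity()
    m[0][3], m[1][3], m[2][3] = x, y, z
    return m


def matmul(a: Matrix, b: Matrix) -> Matrix:
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)] for i in range(4)]


def rigid_inverse(m: Matrix) -> Matrix:
    """Invert a rotation+translation matrix: [R | t]^-1 = [R^T | -R^T t]."""
    inv = identity()
    for i in range(3):
        for j in range(3):
            inv[i][j] = m[j][i]
        inv[i][3] = -sum(m[j][i] * m[j][3] for j in range(3))
    return inv


@dataclass
class SceneObject:  # hypothetical
    name: str
    world: Matrix = field(default_factory=identity)
    selectable: bool = True  # False: grabs are ignored (e.g., the spine)


class Grabber:
    """Hypothetical trigger-drag logic for one controller."""

    def __init__(self) -> None:
        self.target: Optional[SceneObject] = None
        self.offset: Optional[Matrix] = None

    def squeeze(self, obj: SceneObject, controller: Matrix) -> bool:
        if not obj.selectable:
            return False
        # Object pose expressed in the controller's frame, frozen for this grab.
        self.target = obj
        self.offset = matmul(rigid_inverse(controller), obj.world)
        return True

    def move(self, controller: Matrix) -> None:
        if self.target is not None and self.offset is not None:
            self.target.world = matmul(controller, self.offset)

    def release(self) -> None:
        self.target, self.offset = None, None
```

Storing the offset at grab time, rather than snapping the object to the controller, prevents the object from jumping when the trigger is pulled.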

Other visualization

- two-sided lighting and/or additional back lights

- hide/show representations of the controller or the “lighthouses” used by certain VR systems for external position tracking of head and hands

- in progress when the paper was published: a “virtual widget,” i.e., a panel of VR-setting icons accessed by the controller trigger in laser-pointer mode; some of these icons invoke VR-specific GUI panels of other tools (e.g., Segment Editor), if available

Preventing motion sickness

- update rate: set the desired frame rate (frames per second), enforced by adjusting the volume rendering quality

- motion sensitivity: optionally reduce rendering quality while head motion is detected

- optimize scene for VR: one-time optimization of rendering settings to increase performance and/or enhance the VR experience, namely to:

- force use of the graphics card (GPU) for volume rendering

- turn off display of surface-model slice intersections

- turn off surface-model backface culling so that the user can see a surface from the inside
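The update-rate and motion-sensitivity settings amount to a feedback loop on rendering quality. The controller below is a hypothetical illustration, not SlicerVR's implementation. The following are all assumptions:

- the step size;
- the 80% headroom required before quality is raised again;
- the head-speed threshold;
- the 0-to-1 quality scale, which stands in for something like volume-rendering sample distance.

```python
from dataclasses import dataclass


@dataclass
class AdaptiveQuality:
    """Hypothetical feedback loop trading volume-rendering quality for frame rate."""
    desired_fps: float = 90.0          # "update rate" setting
    motion_sensitive: bool = True      # "motion sensitivity" setting
    head_speed_threshold: float = 0.5  # rad/s counted as head motion (assumed value)
    quality: float = 1.0               # 1.0 = finest rendering, min_quality = coarsest
    min_quality: float = 0.1
    step: float = 0.05

    def update(self, frame_time_s: float, head_speed: float) -> float:
        """Return the quality to use for the next frame."""
        if self.motion_sensitive and head_speed > self.head_speed_threshold:
            # Head is moving: render coarsely now, but keep the steady-state level.
            return self.min_quality
        budget = 1.0 / self.desired_fps
        if frame_time_s > budget:
            # Missed the target frame rate: lower quality.
            self.quality = max(self.min_quality, self.quality - self.step)
        elif frame_time_s < 0.8 * budget:
            # Comfortable headroom: raise quality again.
            self.quality = min(1.0, self.quality + self.step)
        return self.quality
```

The gap between the 80% and 100% thresholds acts as hysteresis. It keeps the quality from oscillating every frame when the frame time sits right at the target.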

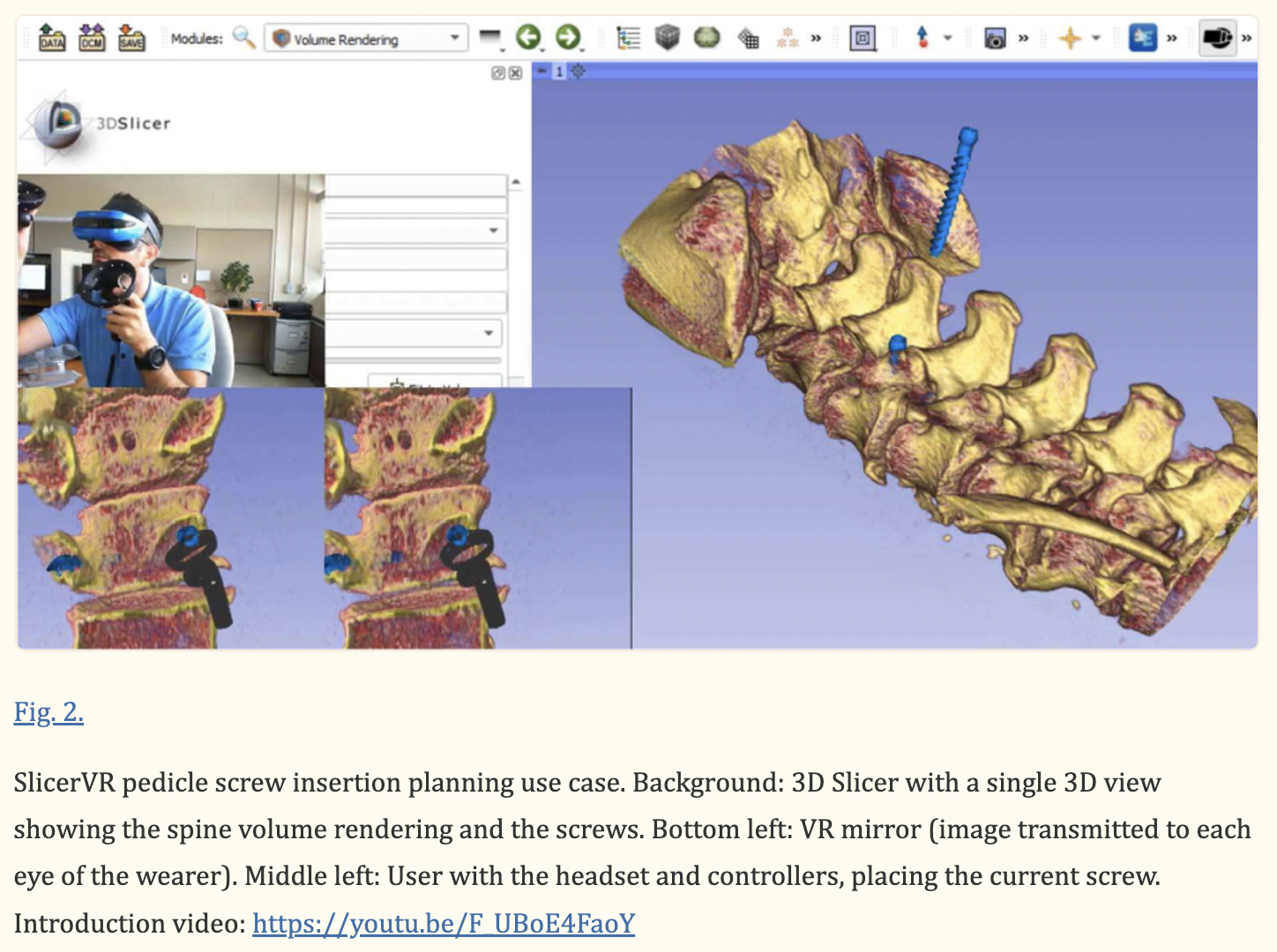

Application: Spinal stabilization planning

[video] 3m 43s

- using controllers to manipulate the scene, the slice view, and screws

- the spine can be shown as a volume rendering (CT series) or as a surface model from prior segmentation

- the spine was made unselectable (unmovable), leaving the screws (opened from STL files) movable

- near the end, is a different person moving the sliders in the Slicer desktop view?

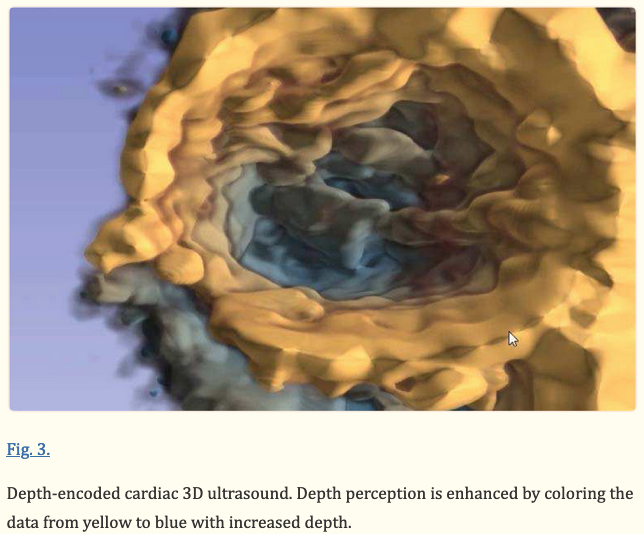

Application: Cardiac ultrasound time series

- A time series in 3D Slicer is called a “sequence”

- VR and coloring by depth aid perception of the noisy ultrasound data as the heart beats
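Coloring by depth means that a point's color encodes its distance from the viewer, which makes near and far structures easier to tell apart in noisy data. A minimal sketch, assuming a linear ramp between two arbitrary colors and depth measured along the viewing direction. This is not SlicerVR's actual shader or color map.

```python
from typing import Sequence, Tuple

RGB = Tuple[float, float, float]


def view_depth(point: Sequence[float], eye: Sequence[float], view_dir: Sequence[float]) -> float:
    """Distance of a point in front of the eye, measured along a unit view direction."""
    return sum((p - e) * d for p, e, d in zip(point, eye, view_dir))


def depth_color(depth: float, near: float, far: float,
                near_rgb: RGB = (1.0, 0.9, 0.6),         # warm for close structures (arbitrary)
                far_rgb: RGB = (0.2, 0.3, 1.0)) -> RGB:  # cool for distant ones (arbitrary)
    """Linearly interpolate between near_rgb and far_rgb, clamping outside [near, far]."""
    t = (depth - near) / (far - near)
    t = min(1.0, max(0.0, t))
    return tuple(a + t * (b - a) for a, b in zip(near_rgb, far_rgb))
```

In a renderer this mapping would run per fragment or per sample, modulating the color from the transfer function rather than replacing it.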

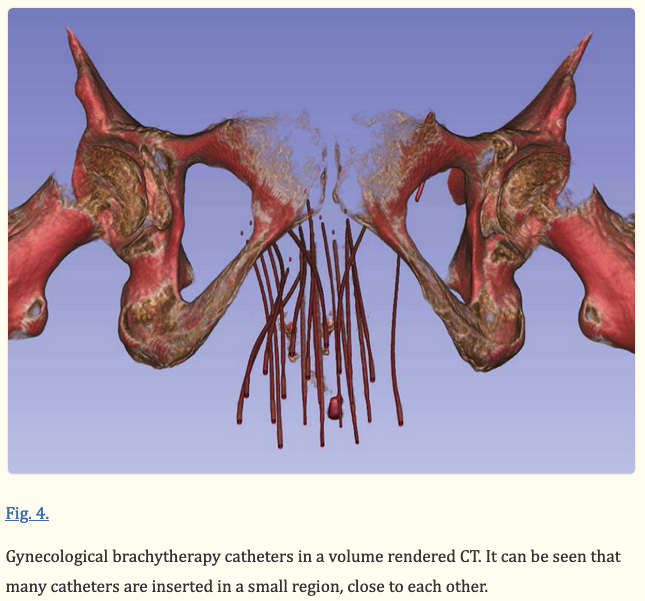

Application: Brachytherapy catheter identification

- Brachytherapy is a type of radiation therapy in which many small, highly radioactive seeds are inserted into the treatment area through catheters

- The closely spaced catheters are hard to distinguish, and VR helps the operator trace the precise path of each and label it individually

- After labeling, the precise dwell points of the radioactive source in each catheter can be planned
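Once a catheter's path has been traced as a polyline, a natural first step toward dwell-point planning is to place candidate positions at a fixed spacing along it. The sketch below does only that resampling. The uniform spacing is an assumption for illustration, the helper name `resample_polyline` is hypothetical, and the planning step itself (e.g., choosing dwell times) is not shown.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float, float]


def resample_polyline(points: List[Point], spacing: float) -> List[Point]:
    """Hypothetical helper: points spaced `spacing` apart (by arc length) along a traced catheter.

    The first sample is the first traced point; a leftover shorter than
    `spacing` at the end of the path produces no extra sample.
    """
    if spacing <= 0:
        raise ValueError("spacing must be positive")
    samples = [points[0]]
    to_next = spacing  # arc length still needed before the next sample
    for a, b in zip(points, points[1:]):
        seg_len = math.dist(a, b)
        pos = 0.0  # arc length already consumed on this segment
        while seg_len - pos >= to_next:
            pos += to_next
            t = pos / seg_len
            samples.append(tuple(a[i] + t * (b[i] - a[i]) for i in range(3)))
            to_next = spacing
        to_next -= seg_len - pos
    return samples
```

Carrying the leftover distance (`to_next`) across segment boundaries keeps the spacing uniform along the curve, even where the traced path bends between vertices.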

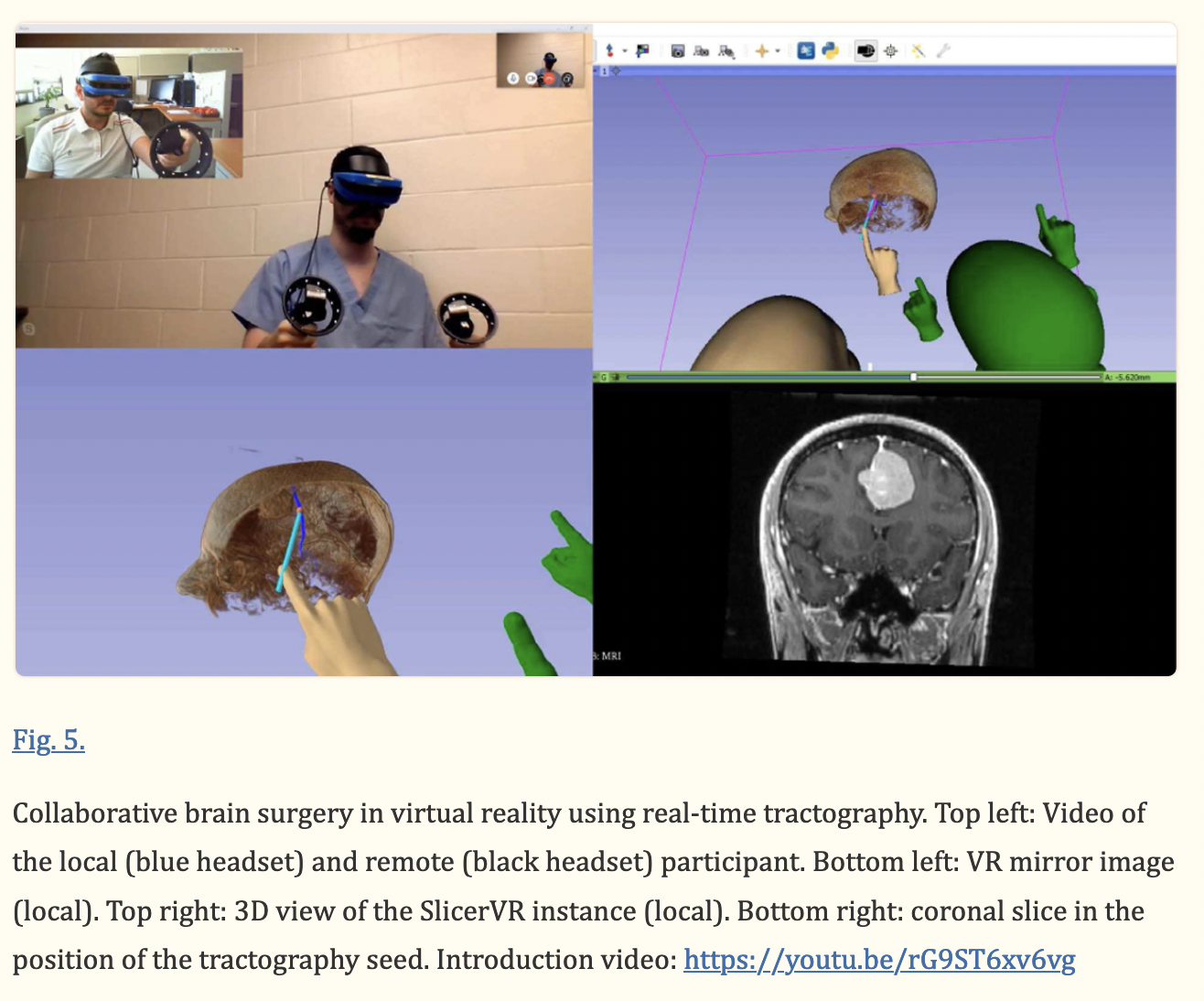

Application: Collaborative surgical planning

Local guy: blue headset, green hands; his VR (bottom left) and desktop (right) views

Remote guy: black headset, tan head and hands

[video] 2m 29s

- two participants in separate locations (+ voice connection through other means)

- tractography: visual representation of nerve tracts in 3D using data from diffusion MRI

- the scene contains two MRI images, a T1-weighted image to show the anatomy and a diffusion tensor image to show the tracts, plus the users' head and hand avatars (as in real life, you can't see your own head)

- the needle tip location specifies which nerve tract to display (uses SlicerDMRI extension)

Synchronization between collaborators

“In our feasibility tests no significant performance drop could be perceived while in collaboration compared to viewing the scene individually. Collaboration does not use more resources other than the additional burden of rendering the avatars if enabled, and there is no noticeable latency due to only exchanging lightweight transforms for the existing objects.”

“Broadcasting (1 to N) scenarios are also possible... in which an instructor [presents] to a group of students, who can observe the scene from a distance, angle, and magnification of their choice.”
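A back-of-the-envelope message size shows why exchanging only transforms is cheap. The format below is invented for illustration: a length-prefixed node name followed by sixteen 32-bit floats for a 4x4 matrix. It is not the OpenIGTLink wire format that SlicerVR uses, and the node name `HeadAvatar` is hypothetical. It does, however, show the order of magnitude: well under 100 bytes per moved object per update. The image volumes themselves are already loaded on each workstation and do not need to be resent.

```python
import struct
from typing import List, Tuple

Matrix = List[List[float]]


def pack_transform(node_name: str, matrix: Matrix) -> bytes:
    """Invented format: uint16 name length, UTF-8 name, 16 little-endian float32 values."""
    name = node_name.encode("utf-8")
    values = [value for row in matrix for value in row]
    return struct.pack("<H", len(name)) + name + struct.pack("<16f", *values)


def unpack_transform(data: bytes) -> Tuple[str, Matrix]:
    (name_length,) = struct.unpack_from("<H", data, 0)
    name = data[2:2 + name_length].decode("utf-8")
    values = struct.unpack_from("<16f", data, 2 + name_length)
    return name, [list(values[row * 4:row * 4 + 4]) for row in range(4)]


head_pose = [[1.0, 0.0, 0.0, 12.5],
             [0.0, 1.0, 0.0, 160.0],
             [0.0, 0.0, 1.0, -30.0],
             [0.0, 0.0, 0.0, 1.0]]
message = pack_transform("HeadAvatar", head_pose)  # hypothetical avatar node name
size = len(message)  # 2 + 10 + 64 = 76 bytes
```

Even at 90 updates per second for a head and two hands, this format totals roughly 20 kB/s, which is negligible next to rendering cost.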

Potential Improvements (as of 2020)

- develop VR widgets for more parts of 3D Slicer; as of this publication, they were available only for the Home, Data, and Segment Editor modules

- add “I'm lost” button to reset to a reasonable default view

- add floor/grid view to help judge distances

- “turntable”-type rotation (around the user's vertical Y axis, passing through the scene center)

- augmented reality (camera pass-through showing actual surroundings)
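The proposed “turntable” rotation spins the scene about a vertical axis through the scene center, rather than about the viewer. A minimal sketch, assuming that the user's Y axis coincides with the world vertical and that the scene center is known (for example, the center of the scene's bounding box, which is also an assumption). The function name is hypothetical.

```python
import math
from typing import Tuple

Vec = Tuple[float, float, float]


def turntable_rotate(point: Vec, center: Vec, angle_rad: float) -> Vec:
    """Hypothetical helper: rotate a point about the vertical (Y) axis through center.

    Heights (y) are unchanged, so "up" in the scene stays up while it spins.
    """
    x, z = point[0] - center[0], point[2] - center[2]
    c, s = math.cos(angle_rad), math.sin(angle_rad)
    # Standard right-handed rotation about +Y, applied to the offset from center.
    return (center[0] + c * x + s * z, point[1], center[2] - s * x + c * z)
```

Restricting rotation to one axis is what distinguishes this from the free “pinch 3D” rotation: the scene can never be tipped over, which may also help users who feel lost.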